using SparseArrays: spdiagm

finiteDiff(n) = spdiagm(0 => ones(n), +1 => -ones(n-1))[1:n-1,:]

finiteDiff(4)3×4 SparseArrays.SparseMatrixCSC{Float64, Int64} with 6 stored entries:

1.0 -1.0 ⋅ ⋅

⋅ 1.0 -1.0 ⋅

⋅ ⋅ 1.0 -1.0CPSC 406 – Computational Optimization

\[ \def\argmin{\operatorname*{argmin}} \def\Ball{\mathbf{B}} \def\bmat#1{\begin{bmatrix}#1\end{bmatrix}} \def\Diag{\mathbf{Diag}} \def\half{\tfrac12} \def\int{\mathop{\rm int}} \def\ip#1{\langle #1 \rangle} \def\maxim{\mathop{\hbox{\rm maximize}}} \def\maximize#1{\displaystyle\maxim_{#1}} \def\minim{\mathop{\hbox{\rm minimize}}} \def\minimize#1{\displaystyle\minim_{#1}} \def\norm#1{\|#1\|} \def\Null{{\mathbf{null}}} \def\proj{\mathbf{proj}} \def\R{\mathbb R} \def\Re{\mathbb R} \def\Rn{\R^n} \def\rank{\mathbf{rank}} \def\range{{\mathbf{range}}} \def\sign{{\mathbf{sign}}} \def\span{{\mathbf{span}}} \def\st{\hbox{\rm subject to}} \def\T{^\intercal} \def\textt#1{\quad\text{#1}\quad} \def\trace{\mathbf{trace}} \]

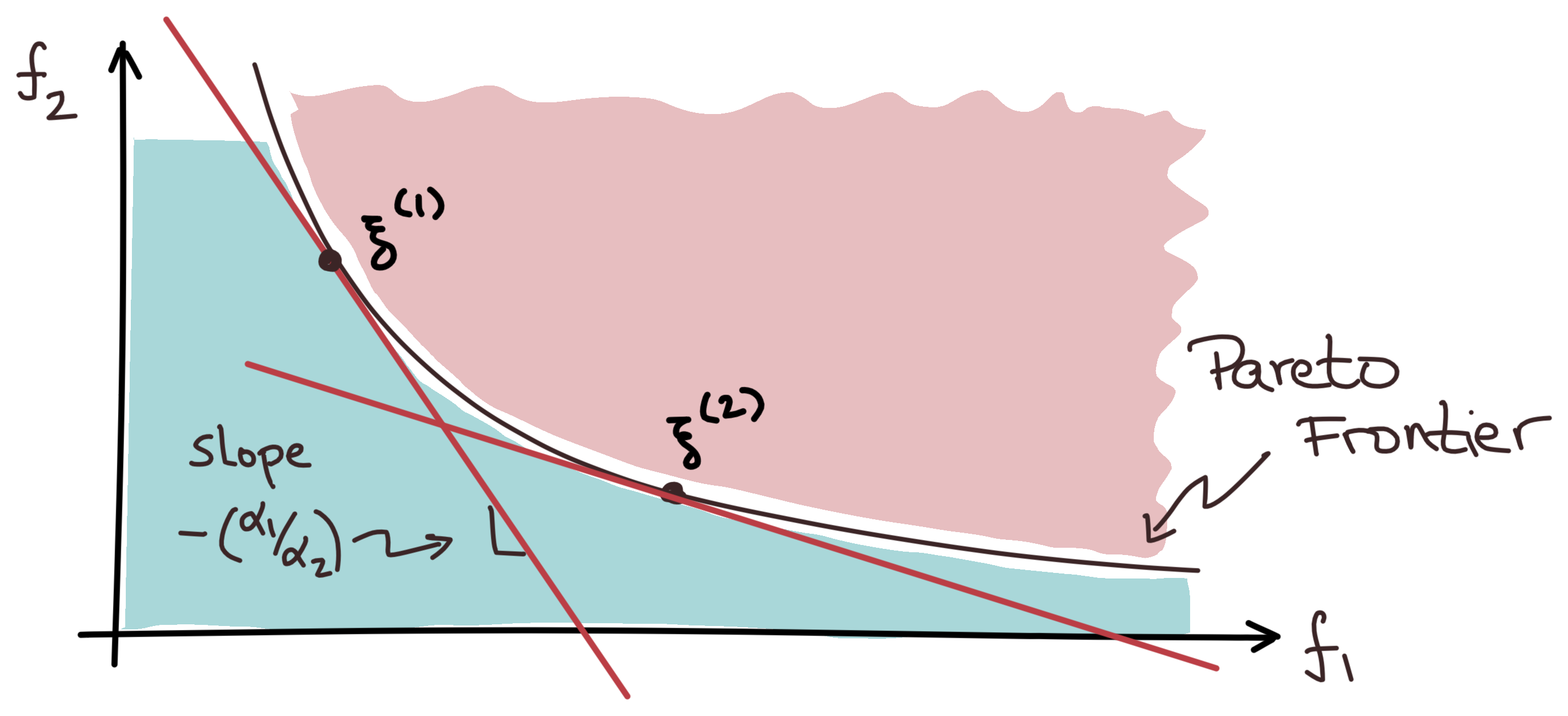

Many problems need to balance competing objectives, eg,

\[ x^{*} = \argmin_x f_1(x) \quad\Longrightarrow\quad f_1(x^{*}) \leq f_2(x^{*}) \]

\(\xi^{(i)} := \{f_1(x^{(i)}),\ f_2(x^{(i)})\}\)

Common approach is to weight the sum of objectives: \[ \min_x \ \alpha_1 f_1(x) + \alpha_2 f_2(x) \]

\[ \argmin_x \{\, \alpha_1 f_1(x) + \alpha_2 f_2(x)\} = \argmin_x \{\, f_1(x) + \tfrac{\alpha_2}{\alpha_1} f_2(x)\} \]

\[ \min_x\ \tfrac12\|Ax - b\|^2 + \tfrac12\lambda\|Dx\|^2 \]

\[ \|Ax-b\|^2 + \lambda\|Dx\|^2 = \left\|\begin{bmatrix}A\\\sqrt\lambda D\end{bmatrix}x - \begin{bmatrix}b\\0\end{bmatrix}\right\|^2 \]

\[ (A\T A + \lambda D\T D)x = A\T b \]

Given tall \(m\times n\) matrix \(A\) with SVD \(A = U\Sigma V^T\), where \(\Sigma\) is the diagonal matrix of singular values \(\sigma_1 \ge \sigma_2 \ge \cdots \ge \sigma_{n} \ge 0.\) Let \[ M \;=\; \begin{bmatrix} A \\[2pt] \sqrt{\lambda}\,I \end{bmatrix} \] For \(i=1,\dots,n\), are the singular values of \(M\) are

Hint: Recall that the singular values of \(M\) are the square roots of the eigenvalues of \(M^TM\).

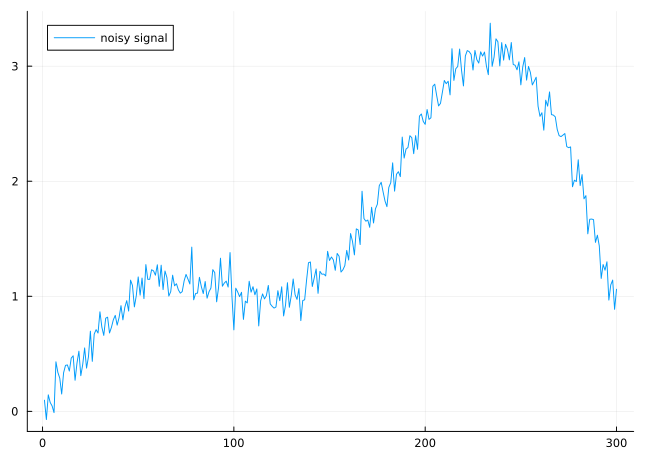

observe noisy measurements \(y\) of a signal \[ b = \hat x + \eta \textt{where} \left\{\begin{aligned} x^\natural&\in\Rn\ \text{is true signal} \\\eta &\in\R^n\ \text{is noise} \end{aligned} \right. \]

Naive least squares fits noise perfectly: \(b\) solves \(\min_x\|x - b\|^2\)

assumption: \(x^\natural\) is smooth \(\quad\Longrightarrow\quad\) balance fidelity to data against smoothness

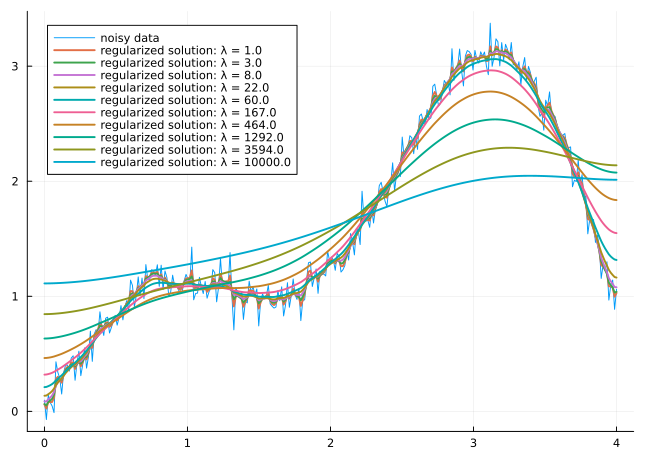

\[ \min_x\ \underbrace{\|x - b\|^2}_{f_1(x)} +\underbrace{\lambda\sum_{i=1}^{n-1}\|x_i-x_{i+1}\|^2}_{f_2(x)} \]

\(D\) is sparse: only \(2n-2\) nonzero entries (2 per row)

\[ \begin{aligned} \min_x\,&\{f_1(x) + \lambda f_2(x)\,\}\\ \min_x\,&\{f_1(x)\mid f_2(x) \leq τ\} \\ \min_x\,&\{f_2(x)\mid f_1(x) \leq \sigma\} \end{aligned} \]

# Generate a small random overdetermined system

Random.seed!(123)

m, n = 9, 3

A = randn(m, n)

b = randn(m)

# Solve min ||Ax-b||^2 + λ||x||^2

function solveTikhonov(A, b, λ)

x = Variable(n)

problem = minimize(sumsquares(A*x - b) + λ*sumsquares(x))

solve!(problem, ECOS.Optimizer(), verbose=false, silent_solver=true)

x = evaluate(x)

return norm(A*x-b), norm(x)

end

# Solve min ||Ax-b||^2 s.t. ||x||^2 ≤ τ

function solveConstrained(A, b, τ)

x = Variable(n)

problem = minimize(sumsquares(A*x - b), sumsquares(x)≤τ)

solve!(problem, ECOS.Optimizer(), verbose=false, silent_solver=true)

x = evaluate(x)

return norm(A*x-b), norm(x)

end

# Solve min ||x||^2 s.t. ||Ax-b||^2 ≤ σ

function solveFlipped(A, b, σ)

x = Variable(n)

p = minimize(sumsquares(x), sumsquares(A*x - b) ≤ σ)

solve!(p, ECOS.Optimizer(), verbose=false, silent_solver=true)

x = evaluate(x)

return norm(A*x-b), norm(x)

end

# Plot ||x|| vs. ||Ax-b|| for various values of λ, τ, and σ

prange = LogRange(1e-2, 1e2, 50)

p1 = map(λ -> solveTikhonov(A, b, λ), prange)

p2 = map(τ -> solveConstrained(A, b, τ), prange)

p3 = map(σ -> solveFlipped(A, b, σ), range(1e-0, 1e1, 50))

p = plot(xlabel="||x||", ylabel="||Ax-b||", ticks=false)

scatter!(p, [pair[2] for pair in p1], [pair[1] for pair in p1], label="Tikhonov", mark=:circle)

scatter!(p, [pair[2] for pair in p2], [pair[1] for pair in p2], label="Constrained", mark=:square)

scatter!(p, [pair[2] for pair in p3], [pair[1] for pair in p3], label="Flipped", mark=:diamond)